I no longer think you should learn to code.

Amjad Masad (CEO of Replit)

I’ve been coding for about 16 years and working professionally for over a decade. After months of testing AI-assisted tools and “vibe coding” in early 2025, I can confidently say: that statement couldn’t be further from the truth.

Sure, LLMs have pulled off some amazing things. And if you don’t know what it really takes to build working software, it’s easy to think the old way is now obsolete.

But trust me, it’s not.

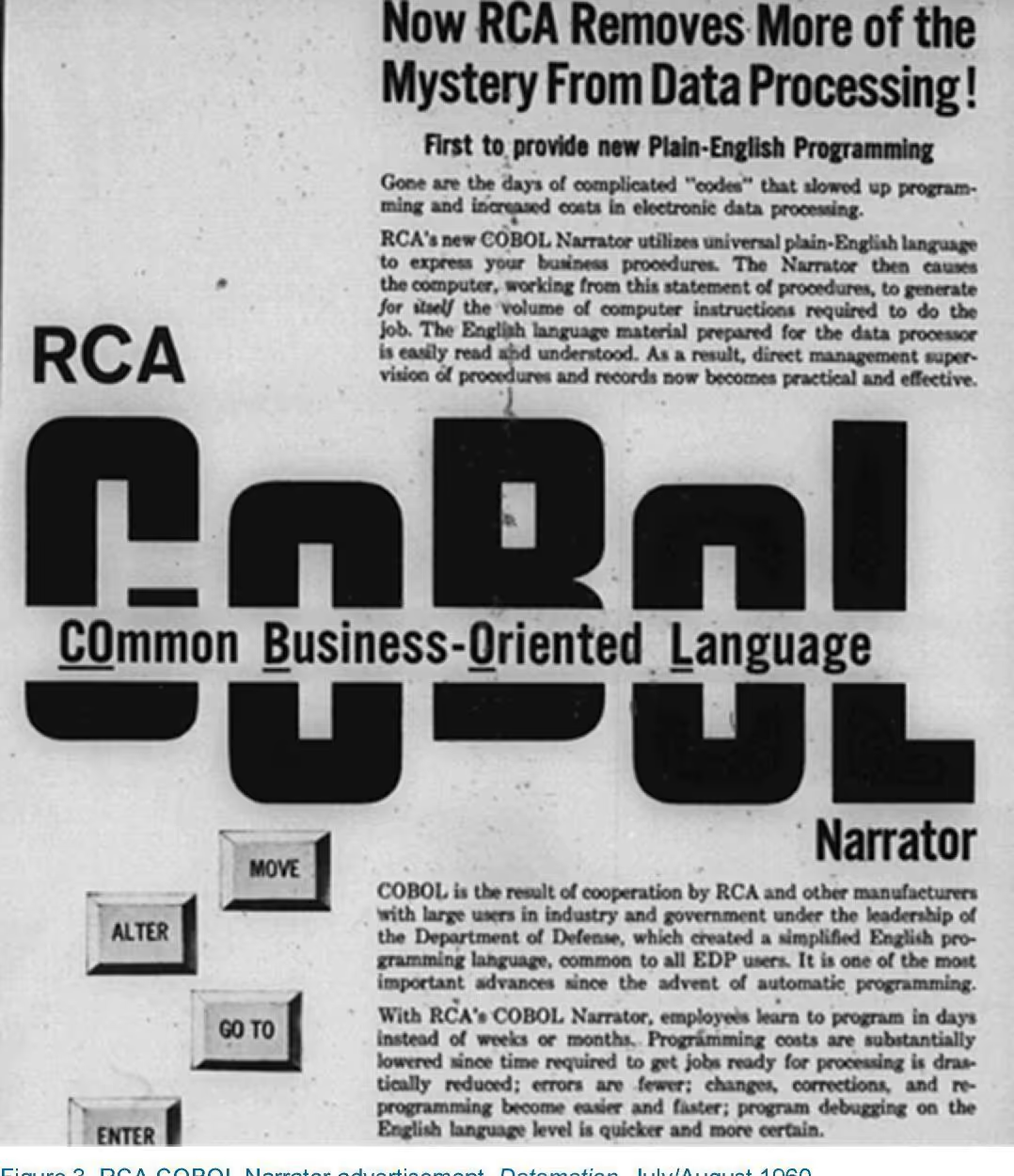

Let’s look at what has happened before, because this is not the first time we have faced a statement like this. Back in the late 1950s and early 1960s, COBOL emerged with the promise that people without traditional programming backgrounds (business professionals, managers, and analysts) could write and understand code directly. It was designed to be as close to English as possible so that non-developers could give the computer instructions to follow.

It happened again in the mid-90s when GeoCities appeared, followed by Dreamweaver and, some years later, WordPress, each making a pretty similar claim: that they would empower regular users to build what until then had required code or networking knowledge.

I'm not saying this will never happen. One day it probably will. I just don't think we're quite there yet, and I don't think we'll be there in the next 3 to 5 years.

My experience with AI

I wanted to write this post last year when the hype train started to pick up speed, but I figured I'd give it more time to get a better picture of what can be achieved with this. So I've been actively using AI as a coding assistant for the past 6 months or so, and I’ve found some really interesting things about it.

First of all, no, I'm in no way against using AI for coding; I think it is great. If I had to say how much AI improves my productivity, I would say maybe 20-30%. I use both Cursor and GitHub Copilot with custom instructions for different projects, but what I have experienced doesn't match what most of the hype is actually about.

Code generated by AI is neither perfect nor complete trash. Sometimes it definitely hits a home run; other times I go as far as turning off Copilot and Cursor’s assist because I’m annoyed at how far off it gets from what is needed. I've been talking with my team at work as well as other dev friends, and we all agree that, while AI is pretty useful, it can be compared to what I can only describe as “a really smart intern… with ADHD and short-term memory”. It can come up with pretty good solutions, but if you are not careful it has a tendency to “over-engineer” and “hallucinate” over the simplest requests and even go ridiculously off topic.

Now, I’m not saying everything is awful. Take, for example, asking Claude 3.7 to write me a function to see if a number is odd or even. I’m using this example because, let’s be honest, this is one of the simplest things to do, and yet there is an npm package just for this, with 300k+ downloads a week.

Anyway, people who overuse npm aside, I gave o4-mini-high a really simple prompt:

how can I write a reusable isOdd function to see if a number is odd or even

Honestly, what I got as a response was both a bit over the bare minimum of what I asked for and a tiny bit over-engineered. Yes, I have to admit this is not the most specific or best-written prompt, and it took me maybe a minute to get most of the answer I was looking for. Let me show you how it went:

The first part, sure, mostly works and would do the trick for 90% of cases. The JSDoc part is a nice-to-have, though that and the examples might be there because I often request both when I’m trying things out. My issue starts with the second function. The isEven function is not really needed here, as we can just call !isOdd(), which is all that function does. This is the simplest way I can show how easily current generative AI returns partially (or sometimes mostly) working functionality along with unsolicited bits. Unless you’re working on something you won’t need to maintain in the future (like a quick MVP or a hackathon), those bits just inflate your codebase needlessly and add potential points of failure in larger codebases. Honestly, if I saw this in a pull request, I’d ask you to drop the "isEven" function before accepting the changes… and I’d probably ask what the thinking was behind it.
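The redundancy is easy to see in a minimal sketch. The one-line `isOdd` below is a hypothetical stand-in, not the model's actual output, and the `values` list is only there for illustration.

```javascript
// Hypothetical stand-in: a plain modulo-based parity check.
const isOdd = (n) => n % 2 !== 0;

// A dedicated wrapper adds a second name to document, test, and maintain...
const isEven = (n) => !isOdd(n);

// ...while negating at the call site gives the same answer.
const values = [1, 2, 7, 10]; // illustrative inputs
const viaWrapper = values.map((v) => isEven(v));
const viaNegation = values.map((v) => !isOdd(v));

console.log(viaWrapper);  // false, true, false, true
console.log(viaNegation); // false, true, false, true
```

Both arrays match, so the wrapper buys nothing that a `!` at the call site does not already provide.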

Here is another example of how things can randomly get out of hand. I had just finished implementing theme selection and theme management for an app I’m building. Originally I had the colors in some shared SCSS files for the components, along with some rogue colors I set in specific screens. I used Claude 3.7 Sonnet to review the useTheme hook and Provider I created and explain to me how they worked.

It came back with a pretty good explanation and a decent understanding of how it worked, so my next step was being lazy (let's call it productive) and asking it to modify the colors used in the components (I went module by module, not the whole codebase at once). This is how it went… by the second file:

This was one of those instances in which I simply turned off Copilot and did it myself. As you can see, it started pretty well: it did the first file without an issue, but during the second file it started modifying localization, so I paused the job and pointed it out (yes, my prompting skills are top notch when coding after working all day). After I pointed out the unsolicited updates, Claude agreed and said “oh that’s right let me continue with the theme color update”, then immediately went back to “Oh what’s that, I’ll fix a localization issue” (not an issue, by the way; that is how the integration is intended to work). In the end, the module had a total of 3 files and I just needed to update 2 lines of code in each, so not really a big deal. After going back and forth with Claude for about 15 minutes, I ended up spending 5 minutes doing it myself.

I’ve seen people generate fully working prototypes using Replit or Lovable, and to be completely honest, I’d say most of what is generated on those platforms is pretty decent. I completely agree it can take us from prototyping straight to implementation in a lot of cases, and it works great. Even I, at the office or on personal projects, bounce ideas for functionality off the ask mode of Copilot and Cursor most of the time, and I've even gotten the agent mode on both to generate most of the boilerplate for things like hooks and new pages. Sure, I need to specify most of this boilerplate in the custom instructions, but I can say without a doubt that having an AI coding assistant saves me at least 20% to 30% of the time a task would otherwise have taken me.

The real problem

One thing I’ve seen in many developers, and as a general tendency as tech and non-tech companies try to implement an AI-first approach, is perfectly described by Terrible Software’s blog post. I’ve seen a lot of people in the industry, sometimes even myself, losing the joy and excitement of solving a problem after a long time spent figuring out the easiest-to-maintain, most performant solution. For a lot of us developers, and I’m talking about any developer who genuinely enjoys being one, building software is not only about getting our paycheck; a lot of the time we do this simply because we find real joy in creating software.

I still sometimes decide not to do things the AI way. I put on my headphones, blast some music that gets me into a state of flow, and get my coffee ready. There is some sort of chemistry that happens inside my brain that just makes me enjoy the problem I’m trying to solve.

Let’s go back to the isOdd example. While we can ask AI to generate something, there are pieces that simply won’t exist: the essence of the developer and the sense of accomplishment are often lost. If I were to spend 5 to 10 minutes doing it myself, I would write something like this:

/**
 * Returns true if n is odd.
 * Returns false if n is even or if there is an error running the function.
 * @param {number} n
 * @returns {boolean}
 */
const isOdd = (n) => {
  try {
    const num = Math.abs(n); // We get the absolute value (NaN if n cannot be converted to a number)
    if (isNaN(num)) throw new TypeError('expected a number'); // Check if the value is not a number (NaN)
    if (!Number.isInteger(num)) throw new TypeError('expected an integer'); // Check if the number is an integer
    return num % 2 !== 0;
  } catch (err) {
    console.error(`[isOdd] an error occurred while reviewing 'n' - Error: ${err}`);
    return false;
  }
};

Again, this is such a simple function, and sometimes it is experience that makes you think of the different things that may be needed. In my case, I always have the console open when I’m developing so I can see things happening, so instead of having the program break if I don’t pass a number, I’d rather get a log in my console, make it an error so I notice it easily, and check for the multiple cases that can potentially happen. You know, the small things.
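To make those small things concrete, here is a minimal sketch that runs a working version of the same idea against a few inputs. The plain `n` parameter is my assumption about the intended signature, and the `samples` list is purely illustrative.

```javascript
// Sketch of the hand-written isOdd: logs an error and returns false on bad input.
const isOdd = (n) => {
  try {
    const num = Math.abs(n); // NaN when n cannot be converted to a number
    if (Number.isNaN(num)) throw new TypeError('expected a number');
    if (!Number.isInteger(num)) throw new TypeError('expected an integer');
    return num % 2 !== 0;
  } catch (err) {
    console.error(`[isOdd] an error occurred while reviewing 'n' - Error: ${err}`);
    return false;
  }
};

// Illustrative inputs (assumed, not from the original example).
const samples = [3, -3, 4, 2.5, 'abc', undefined];
const results = samples.map((value) => isOdd(value));

console.log(results); // true, true, false, false, false, false
```

The last three inputs each leave an error in the console instead of breaking the caller. One caveat of leaning on `Math.abs`: it coerces its argument, so a numeric string such as `'3'` is treated as the number 3 rather than rejected.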

As I mentioned before, the joy of crafting something, of going through the full process of translating an idea into a working piece of software, the struggle of figuring out the way of getting things to work the way you want them to or the multiple hours spent trying to debug that one edge case that won’t go away and finishing the day exhausted but happy that you managed to get it all to work is something that can’t be replaced by getting an agent to build something for you.

Another thing I’ve noticed in a lot of people in the dev community who rely heavily on AI is that the critical thinking and creativity needed to reach a goal are getting lost. People who used to impress me because they could design a really well-thought-out, complete database schema over a couple of beers at a backyard barbecue, and walk through why every table and every relationship is where it is and holds the information it does, now look at me and say “I’m not sure how we can build that, maybe we should GPT it and see if it works”. It is not only about productivity; it is about being able to tell the difference between what is needed and what we are getting.

The main problem I see is this: when we outsource the parts of programming that used to challenge us, that demanded our full attention and our creativity, are we also outsourcing the opportunity for growth? For satisfaction? Can we find the same sense of improvement and fulfillment in prompt engineering that we once found in problem-solving through code?

As Bill Gates said a couple of days ago, AI won’t be the end of developers. At least I don’t think it will, but I’m sad to acknowledge that it will indeed replace a lot of us. If you ask me, those who don’t want to embrace these new tools will be left behind just as much as those who lose their ability to solve problems without them. We are in a weird time right now, one where technology can make us better or worse at what we do, and where it takes us is on us to decide. I will continue to code with and without AI. It is something I’ve loved for years and want to keep doing for years to come. But what has been your experience, and where do you think AI will take us in the coming years?